Most people have heard of Moore’s Law, which broadly states that the number of transistors on a silicon chip doubles every two years, with a corresponding increase in computing performance. It has held true for the 50-plus years since it was formulated, contributing to huge improvements in the speed, size, and cost of technology.

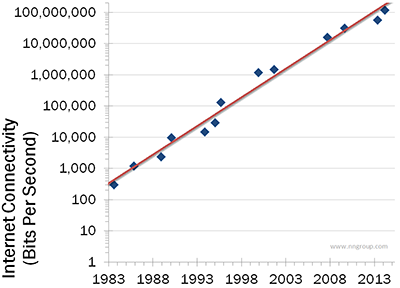

A lesser-known theory is Nielsen’s Law, which applies similar thinking to network speeds. First quantified by Jakob Nielsen in 1998, it states that the bandwidth available to high-end broadband connections will grow by 50 per cent every year, leading to a 57x compound growth in capacity in a decade. The fact that it still holds true more than 15 years later shows the strength of the model.
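The 57x figure follows directly from compounding 50 per cent growth over ten years. As a quick check, here is a minimal Python sketch; the function name `compound_growth` is purely illustrative and not something Nielsen defines.

```python
def compound_growth(annual_rate: float, years: int) -> float:
    """Return the total growth factor after compounding annual_rate for the given years."""
    return (1.0 + annual_rate) ** years


# Nielsen's Law: 50 per cent growth per year, compounded over a decade.
decade_factor = compound_growth(0.50, 10)
print(f"Growth over 10 years: {decade_factor:.1f}x")  # a little under 58x
```

The result is 1.5 raised to the tenth power, which is where the "57x in a decade" shorthand comes from.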

Nielsen based his idea on his own experience of connection speeds, from his first 300 bps modem in 1984, through ISDN in 1996, all the way to 120 Mbps in 2014 - see image below ("Nielsen’s Law of Internet Bandwidth" by Jakob Nielsen on April 5, 1998).

Comparing the data points shows this constant growth over time, although, obviously, factors such as network investment, availability, and cost mean that not every user will be able to sign up to the fastest speeds.
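The growth rate implied by the two end points can be checked with a few lines of Python. This is a rough sketch, not Nielsen's own method: it assumes the 1984 modem ran at 300 bits per second (the figure in Nielsen's original article) and uses only the first and last data points rather than fitting every measurement. The function name `implied_annual_growth` is illustrative.

```python
def implied_annual_growth(start_bps: float, end_bps: float, years: int) -> float:
    """Return the constant yearly growth rate that turns start_bps into end_bps."""
    return (end_bps / start_bps) ** (1 / years) - 1


# Assumed end points: 300 bps in 1984 and 120 Mbps in 2014.
rate = implied_annual_growth(300, 120_000_000, 2014 - 1984)
print(f"Implied annual growth: {rate:.1%}")  # in the region of 50 per cent a year
```

Under these assumptions the implied rate comes out slightly above the 50 per cent stated in the Law, which is consistent with the claim that it has held over three decades.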

Given the explosion in the number of internet connections, and the subsequent need for operators to update their infrastructure, the fact that Nielsen’s Law still stands is testament to the vital importance of internet connectivity to our daily lives.

Adding new infrastructure obviously has a cost implication, particularly when moving between technologies, such as from copper to fiber. Therefore, the question that many operators keep asking is: "How much bandwidth is enough?" In other words, will Nielsen’s Law continue to operate into the 2020s and beyond?

What is filling broadband connections?

The answer seems to be that it will, with demand accelerating as more and more consumers connect their devices to the internet. Take a typical family with two teenage kids. Everyone will have a smartphone and/or tablet, along with two or three PCs within the household. Add at least one smart, internet-connected TV or set-top box - potentially streaming 4K content - and you can already see the pressure on bandwidth and speeds. And this is before the widespread adoption of Internet of Things devices, such as sensors, security cameras, and other products that will all use the same broadband connection.

The good news for operators is that the technology is available to match Nielsen’s Law. Gigabit fiber to the home (FTTH) connections deliver enough bandwidth to meet subscribers' needs, even as those needs grow in the near future. Nielsen’s Law has proved to be accurate since 1998 and, by my rough calculations, this means subscribers will require 1 Gbps (1,000,000,000 bits per second) connections by around 2019.
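The rough calculation can be reproduced in Python. The starting point is an assumption, since the text does not say which baseline was used: this sketch takes the 120 Mbps figure for 2014 quoted earlier and applies 50 per cent growth per year. The function names are illustrative.

```python
import math


def projected_speed_mbps(base_mbps: float, base_year: int, year: int,
                         annual_rate: float = 0.50) -> float:
    """Project a high-end connection speed forward at a fixed annual growth rate."""
    return base_mbps * (1 + annual_rate) ** (year - base_year)


def first_year_reaching(target_mbps: float, base_mbps: float, base_year: int,
                        annual_rate: float = 0.50) -> int:
    """Return the first whole year in which the projected speed meets the target."""
    years_needed = math.log(target_mbps / base_mbps) / math.log(1 + annual_rate)
    return base_year + math.ceil(years_needed)


# Assumed baseline: 120 Mbps in 2014, taken from Nielsen's data points.
speed_2019 = projected_speed_mbps(120, 2014, 2019)
crossing_year = first_year_reaching(1000, 120, 2014)
print(f"Projected 2019 speed: {speed_2019:.0f} Mbps")
print(f"First year at or above 1 Gbps: {crossing_year}")
```

With this baseline the projection lands a little short of 1 Gbps in 2019 and passes it the following year, so "1 Gbps by 2019" is best read as a round-number estimate rather than an exact result.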

The only issue, as recent figures released by the FTTH Council Europe demonstrate, is that FTTH is still far from ubiquitous. Despite an increase of 19 per cent in FTTH and fiber to the building (FTTB) subscribers over the last year, bringing the total to 17.9m across Europe, countries such as the UK, Ireland, Austria, and Belgium still have fewer than 1 per cent of their households connected to full fiber. By contrast, Spanish FTTH subscribers grew by 65 per cent. Overall, Europe is well behind regions such as Asia (where more than 70 per cent of South Koreans have fiber), showing that there is still room for improvement.

The latest fiber figures, and Nielsen’s Law, point to a need for operators to accelerate their fiber network deployments and find ways of reducing installation time and costs. Much of this is beginning to happen as technologies such as pushable fiber and pre-terminated cable drive the complexity out of last-drop deployments, allowing greater use of non-skilled labor and faster installations. As a result, operators can make FTTH deployments simpler and more economical, better meeting the needs of bandwidth-hungry consumers.

Looking into the future, whether Nielsen’s Law will survive for as long as Moore’s Law is difficult to predict. However, one thing is certain – capacity needs are ever-increasing, opening up new opportunities for operators to cost-effectively deploy fiber across their networks.